Business Context

I joined this Air Force contract 9 months into development. The data science team had built impressive AI models but hadn't yet connected them to maintainer workflows. As Lead UX Designer, I partnered with product and data scientists to ground that work in user reality. Through research across 3 squadrons, we discovered that maintainers needed something different from what was initially assumed: not predictive recommendations, but natural language search and navigation through technical manuals. My design work translated these insights into interfaces that aligned our AI capabilities with operational needs, helping the team pivot toward a solution that ultimately secured ATO approval and reduced troubleshooting time by 70%.

Understanding the Problem & Research

In U.S. Air Force maintenance operations, technicians spend 30-120 minutes per troubleshooting job manually navigating complex PDF documentation. This time-intensive "hunting and gathering" approach creates significant delays in safety-critical aircraft maintenance.

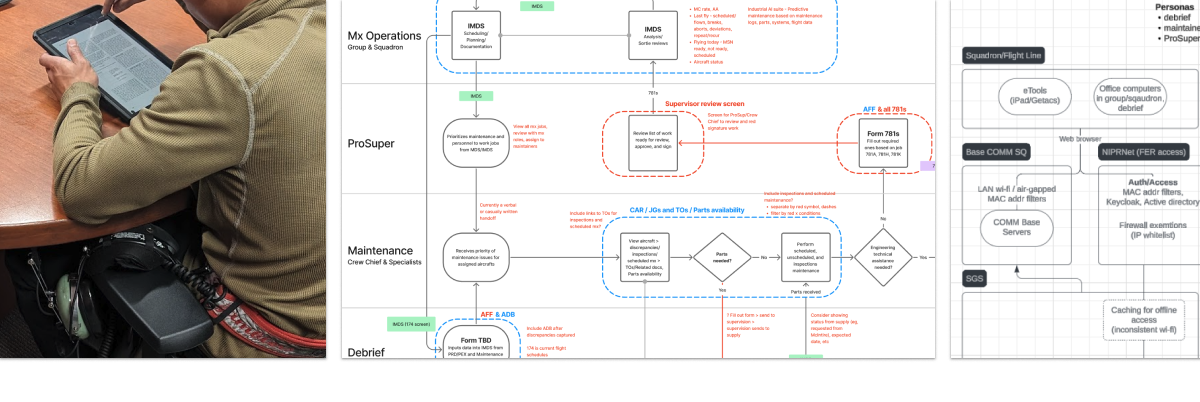

We conducted 60+ hours of field research across 3 squadrons, observing 30+ maintainers through job shadowing, contextual interviews, and think-aloud protocols. Documentation revealed an average of 7 system changes per maintenance task across flight line operations, debrief personnel, and technical document management teams.

Key Findings

Our research identified three critical user types: Maintenance Technicians performing hands-on work, Less Experienced Maintainers needing guidance, and Debriefers translating pilot reports into fault codes.

The real problem wasn't that technicians lacked technical knowledge. They were spending most of their time hunting for 6-digit fault codes scattered across multiple PDF manuals. For every issue, technicians bounced between fault isolation guides, job guides, wiring diagrams, and illustrated parts breakdowns, often checking 5-10 different documents. These PDFs had no links between related procedures, so maintainers had to either memorize document structures or rely on what more experienced colleagues had taught them. Junior technicians took three times longer because they didn't know which manual would have the answer or how different sections connected. The safety-first culture demanded strict protocol compliance, and flight line connectivity issues meant everything had to work offline.

Design Evolution & Solution

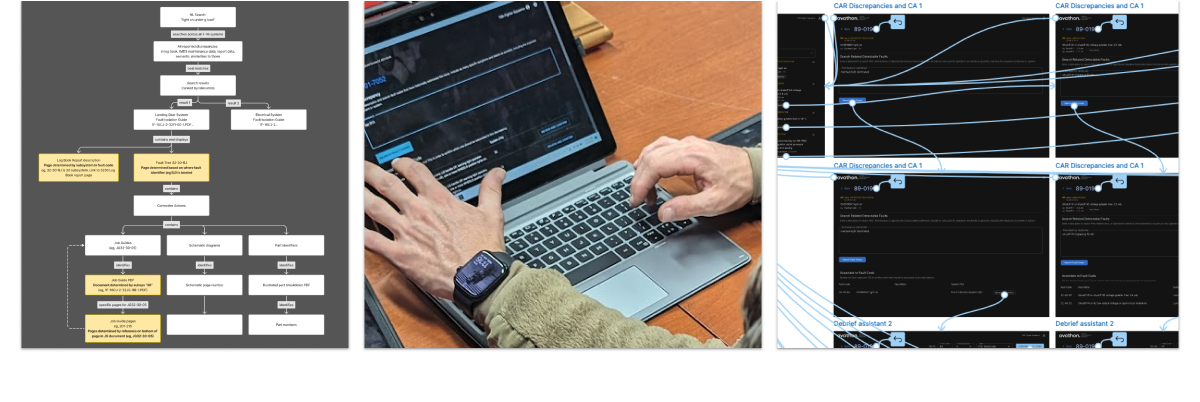

I built and tested multiple prototypes, starting with simple wireframes and progressing to functional applications with real data. Each version taught us something important about what technicians actually needed.

Design Iterations

Our initial concepts explored data-rich dashboards with predictive recommendations, envisioning comprehensive maintenance insights. However, without access to classified systems like IMDS, we learned to work within available resources—focusing on technical documentation we could actually access.

We then explored predictive AI capabilities that could recommend corrective actions based on symptom patterns. Through stakeholder workshops and testing, we discovered this approach conflicted with essential safety protocols. Maintenance leadership helped us understand their reality: "We have to follow fault isolation protocols." This insight shifted our thinking—the AI should accelerate finding procedures, not bypass them.

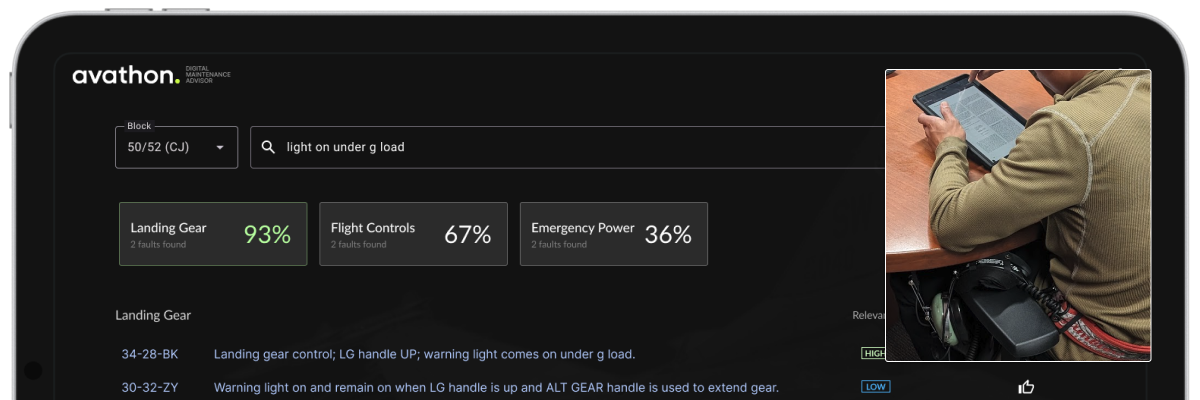

Through continuous testing and refinement, our design evolved from complex multi-panel dashboards toward increasingly focused interfaces. Each iteration taught us that technicians valued speed and accuracy over feature richness. The breakthrough came when we reframed the AI's role as an intelligent guide through existing procedures rather than a system trying to replace established expertise.

Final Solution

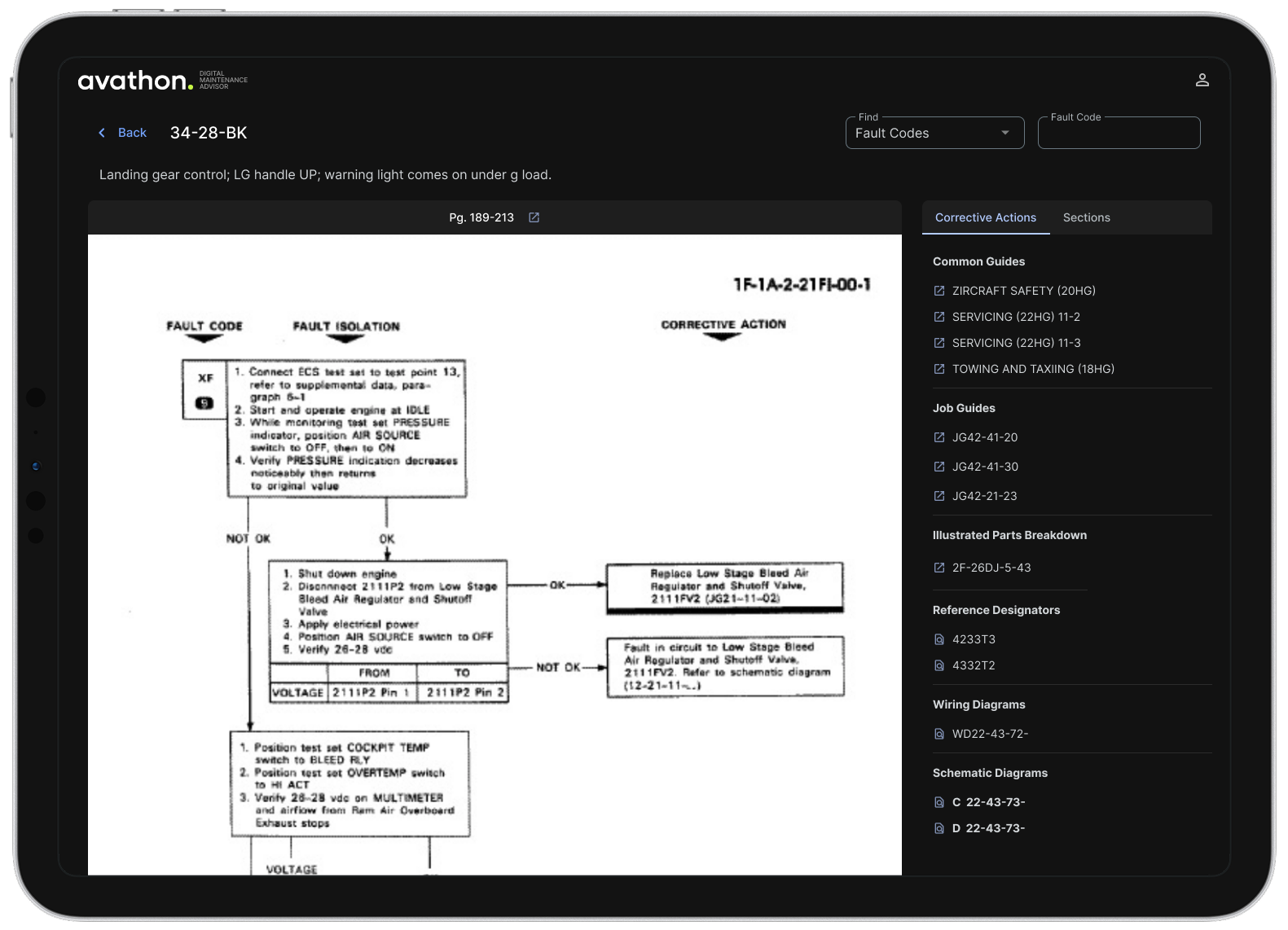

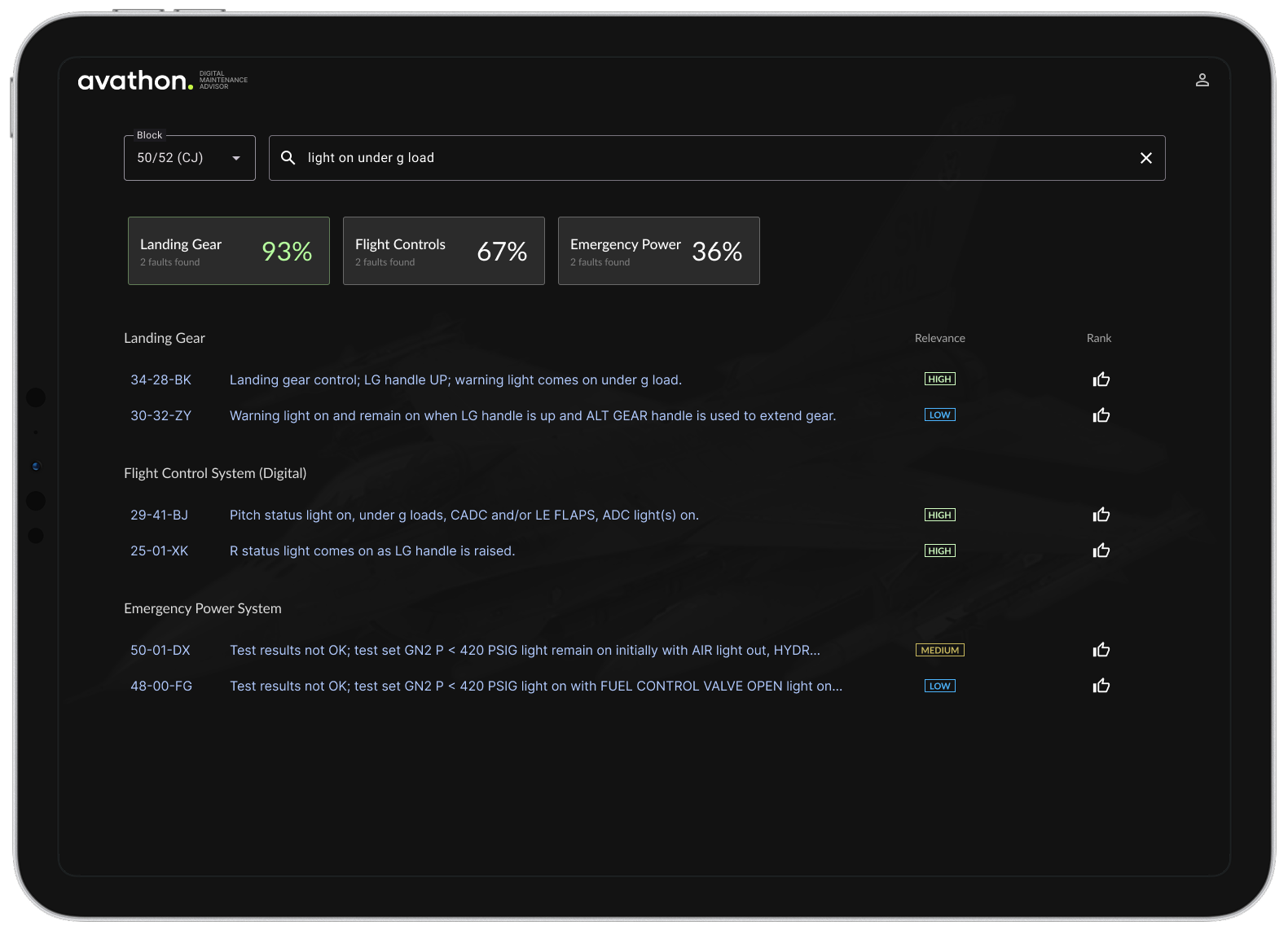

The solution enables technicians to "write how they speak" to describe aircraft discrepancies and receive instant access to relevant technical documentation—intelligent navigation, not decision automation.

What we built

- Natural language search: Type "engine won't start when hot" and get relevant procedures, no technical jargon required

- Connected documentation: Links between related procedures that were previously scattered across different manuals

- Fast results: Cut search time from 30 seconds to 5 seconds by understanding what technicians really meant

- Works offline: Full functionality on the flight line where internet is spotty

- Scales with complexity: Quick answers for routine issues, detailed guidance when things get complicated

The system works like having an experienced technician nearby who knows where everything is in the manuals. It saves time finding information while respecting the Air Force's careful, safety-focused approach to maintenance.

Results and Impact

Quantified Outcomes

- 30% faster fault code identification, validated through comparative usability testing

- 70% reduction in troubleshooting time, from 30-120 minutes down to under 10 minutes

- Government ATO approval achieved for potential fleet-wide deployment

Strategic Value

Nine months into this $2M+ Air Force contract, we successfully pivoted the project into a validated AI solution for safety-critical environments. Our research revealed the real problem: maintainers were spending hours manually searching through PDFs just to find 6-digit fault codes and locate the right starting point in fault isolation procedures.

The solution resonated with leadership, who told us: "Sometimes it feels like our words fall upon deaf ears but I see action here." Field supervisors reported the system "turns our newest technician into our most efficient," all while maintaining the strict safety protocols and regulatory compliance that define Air Force operations.

Key Learnings

- Field research is everything in government environments. The initial AI models completely missed what users actually needed. It took 60+ hours of observation and interviews to understand the real workflow problems.

- Cross-functional collaboration means truly bridging worlds. User insights have to directly shape how ML models get trained and what data gets prioritized, not just inform interface design.

- Technical constraints can produce better designs. The offline requirement forced us to build a more robust architecture. Safety compliance requirements, rather than limiting us, actually increased user trust and adoption.

This project taught me that in complex, regulated environments, the best UX isn't about innovation for its own sake. It's about deeply respecting existing expertise while removing unnecessary friction. Sometimes the most powerful design decision is recognizing what already works and leaving it alone, focusing instead on eliminating the real pain points.